Introducing Linstat

We're pleased to announce that on Monday, August 23rd, we will make our next generation of Linux servers available to SSCC members. These servers are much faster than Kite, Hal and Falcon, and will have about three times as much RAM and eight times as much temporary disk space. They will also be set up as a cluster, much like Winstat, so we've named them Linstat.

Features of the New Linstat Servers

Each Linstat server has two quad-core Intel Xeon X5550 CPUs (eight physical cores in total) and is substantially faster than our current servers. Each also has 48GB of RAM and about 200GB of space in /tmp. All the Linstat servers run 64-bit Linux (as Falcon does now), which allows them to allocate much more memory to programs.
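
If you want to check what kind of machine you've landed on, standard Linux commands report the architecture, word size and number of CPUs. The sketch below wraps them in a shell function; the name describe_server is hypothetical. Note that getconf counts logical CPUs: the X5550 supports Hyper-Threading, so if that feature is enabled the count may be 16 rather than the eight physical cores.

```shell
#!/bin/sh
# describe_server is a hypothetical helper, not an SSCC-provided command.
# It prints the host name, architecture, word size and online CPU count.
describe_server() {
    printf 'host: %s\n' "$(uname -n)"
    printf 'arch: %s\n' "$(uname -m)"                   # x86_64 on 64-bit Linux
    printf 'bits: %s\n' "$(getconf LONG_BIT)"           # 64 on a 64-bit system
    printf 'cpus: %s\n' "$(getconf _NPROCESSORS_ONLN)"  # logical CPUs currently online
}

describe_server
```

On a 64-bit server you would expect the arch and bits lines to show x86_64 and 64.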

Connecting to Linstat

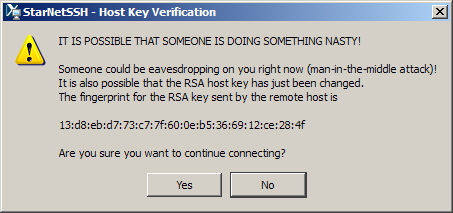

Attempts to connect to Kite, Hal and Falcon will be automatically redirected to Linstat, so you don't have to make any changes to how you connect. The first time you try to connect to one of the older servers and get Linstat instead, you will get a warning message. For example, X-Win32 will give you the following:

You can ignore this message and click Yes to continue connecting. Other client programs may instead ask whether you want to accept and save the new key. You should only get such a warning message once.

You can add a direct connection to Linstat by following the instructions in Connecting to SSCC Linux Computers using X-Win32. We will add this connection to copies of X-Win32 running on PCs in the Sewell Social Science Building that connect to the SSCC's PRIMO domain, but this process will probably not be complete by Monday morning.

The Linstat Cluster

When you log in to Linstat, you will not log in to a particular machine. Instead, you'll connect to linstat.ssc.wisc.edu and be directed to one of the three Linstat servers (linstat1, linstat2 and linstat3) automatically. This will spread users among the three servers and help avoid situations where one server is much busier than another.

If you are running a long job and need to connect to the same server again to monitor it, log in to Linstat and then type ssh server, replacing server with the name of the server where you started the job (for example, ssh linstat2). Be sure to remember which server you started your job on! Most people have the server name in their prompt, but if you don't, you can find out which server you're using by typing printenv HOST.
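
One way to avoid forgetting which server a job is on is to write the server's name to a file when you start the job. The sketch below assumes your home directory is the same on all three Linstat servers and that you run the functions from sh or bash; the function names and the file ~/.linstat_job_server are hypothetical, not something SSCC provides.

```shell
#!/bin/sh
# Hypothetical helpers for getting back to the Linstat server running a job.
# The state file name is an illustrative assumption, not an SSCC convention.
SERVER_FILE="${SERVER_FILE:-$HOME/.linstat_job_server}"

# Run on the server where you start the job: saves its short name (e.g. linstat2).
remember_server() {
    server="${HOST:-$(uname -n)}"   # HOST as suggested in the text, else uname -n
    server="${server%%.*}"          # drop any domain, keeping e.g. linstat2
    printf '%s\n' "$server" > "$SERVER_FILE"
    printf 'Job server recorded: %s\n' "$server"
}

# Run after logging in to Linstat again: ssh to the recorded server.
reconnect_server() {
    if [ ! -r "$SERVER_FILE" ]; then
        echo "No server recorded in $SERVER_FILE" >&2
        return 1
    fi
    ssh "$(cat "$SERVER_FILE")"
}
```

Save the functions to a file, load them with . followed by the file name, run remember_server when you start the job, and run reconnect_server after your next login to Linstat. If your login shell is tcsh, start bash first.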

Condor

The Condor servers need to be rebuilt with 64-bit Linux for compatibility with Linstat. We'll also change their disk configuration to make more space available in /tmp. On Monday we'll have a small number of Condor servers ready for use, and we'll add the others back (along with the current Kite, Hal and Falcon) as they're completed.

We appreciate that so many of our users send their long jobs to Condor, but this has sometimes left Condor very busy while the faster interactive servers had idle CPUs. To put that idle capacity to use, each Linstat server will also run two Condor jobs from the pool. (We will monitor performance and may increase that number.)

Memory Usage

We've limited the amount of memory any one person can use to 24GB (half of each server's RAM), which is more RAM than any of our current servers has. If you need more than that, contact the Help Desk and we will discuss raising your limit, though you'll need to run your program at times when it will not interfere with other users.
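
To get a rough idea of how close you are to the 24GB limit, you can add up the resident memory (RSS) that ps reports for your processes. This is only an approximation: shared memory may be counted more than once, and the way the limit is actually enforced may measure memory differently. The function names are hypothetical.

```shell
#!/bin/sh
# Rough check of the memory your processes on this server are using, compared
# with the 24GB per-person limit. Helper names are hypothetical; RSS is only an
# approximation of whatever the enforced limit actually measures.
LIMIT_KB=$((24 * 1024 * 1024))   # 24GB in KB (1GB = 1024 * 1024 KB)

# Read one number (KB) per line on stdin and print their sum.
sum_kb() {
    awk '{ total += $1 } END { printf "%d\n", total }'
}

# Resident set size, in KB, of every process owned by the current user.
my_memory_kb() {
    ps -u "$(id -un)" -o rss= | sum_kb
}

memory_report() {
    used_kb=$(my_memory_kb)
    printf 'Using %d MB of the %d MB limit\n' \
        $((used_kb / 1024)) $((LIMIT_KB / 1024))
}
```

Run memory_report on the server where your job is running; ps only sees processes on the current server.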

/ramdisk

/ramdisk is a special "directory" that is actually stored in RAM, making it extremely fast. On Linstat the maximum size of /ramdisk is 24GB, which is large enough to make it useful for most applications that need fast disk access.
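
Since /ramdisk is presumably shared by everyone on the server and has a fixed maximum size, it can be worth checking free space before copying a large file there. Typing df -h /ramdisk shows the free space directly; the sketch below (with hypothetical function names) turns that check into a test you can use in scripts.

```shell
#!/bin/sh
# Hypothetical helpers: check whether a file or directory would fit in the
# space currently free in a target directory such as /ramdisk or /tmp.

# Free space, in KB, on the filesystem holding directory $1.
free_kb() {
    # -P forces one line per filesystem; column 4 is the available space.
    df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# Total size, in KB, of file or directory $1.
size_kb() {
    du -sk "$1" | awk '{ print $1 }'
}

# Succeeds (exit status 0) if $2 is smaller than the free space in $1.
fits_in() {
    [ "$(size_kb "$2")" -lt "$(free_kb "$1")" ]
}
```

For example, fits_in /ramdisk mydata.dta && cp mydata.dta /ramdisk/ copies the (hypothetical) file only if it fits, assuming you can write directly to /ramdisk. Other users' files can take up the space after you check, so leave some margin.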

SAS

On Linstat, the default directory where SAS stores temporary data sets (the WORK library) is /ramdisk. This will significantly speed up data-intensive programs and keep them from slowing down the server through disk I/O bottlenecks.

If you need more than 24GB of temporary space, change the WORK directory to /tmp. You can do so by adding the -work option to your SAS command:

sas -work /tmp myprogram

You'll then be able to use up to about 200GB of space (shared with other users of /tmp on that server).
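
If you run the same program on data of different sizes, a small wrapper can choose the WORK directory for you. This is a hypothetical sketch: you supply an estimate of the temporary space needed in whole GB, and it uses the 24GB /ramdisk limit as the cutoff.

```shell
#!/bin/sh
# Hypothetical wrapper: choose the SAS WORK directory from an estimate of the
# temporary space (in whole GB) a program needs. The 24GB threshold is the
# /ramdisk size; /tmp offers about 200GB.
RAMDISK_GB=24

sas_work_dir() {
    if [ "$1" -gt "$RAMDISK_GB" ]; then
        echo /tmp        # too big for /ramdisk
    else
        echo /ramdisk    # the Linstat default WORK location
    fi
}

# Usage: run_sas ESTIMATED_GB PROGRAM
run_sas() {
    sas -work "$(sas_work_dir "$1")" "$2"
}
```

For example, run_sas 30 myprogram runs sas -work /tmp myprogram, the command shown above. Because other people's SAS jobs may already be using part of /ramdisk, a cutoff lower than 24GB may be safer in practice.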

Since SAS jobs that use /ramdisk as their WORK library are unlikely to cause disk I/O bottlenecks, Condor can manage them properly. The current condor_sas program will be retired on Monday, and condor_sas will go back to being a script that submits SAS jobs to Condor. These jobs can then be managed with condor_status, condor_rm, etc. (see An Introduction to Condor).
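
For a sense of what submitting a SAS job to Condor involves, the sketch below writes a standard HTCondor submit description file for one program. It is not SSCC's condor_sas script, whose options aren't described here, so in practice use condor_sas. The function name, the output file names and the SAS path /usr/local/bin/sas are assumptions; check the real path with which sas. The Condor log is named myprogram.condor.log so that it doesn't overwrite the myprogram.log file SAS writes.

```shell
#!/bin/sh
# Hypothetical helper, not SSCC's condor_sas: write an HTCondor submit
# description that runs one SAS program, and print the file's name.
# The default SAS path is an assumption. Condor resolves a relative
# executable against the submit directory, not PATH, so use a full path.
SAS_BIN="${SAS_BIN:-/usr/local/bin/sas}"

write_sas_submit() {
    prog=$1    # SAS program name, e.g. myprogram
    cat > "$prog.sub" <<EOF
universe   = vanilla
executable = $SAS_BIN
arguments  = $prog
getenv     = true
output     = $prog.condor.out
error      = $prog.condor.err
log        = $prog.condor.log
queue
EOF
    printf '%s\n' "$prog.sub"
}
```

You could then submit the job with condor_submit "$(write_sas_submit myprogram)", list your queued jobs with condor_q and remove one with condor_rm followed by its job ID.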